Merged in feat/sw-2333-package-and-sas-i18n (pull request #2538)

feat(SW-2333): I18n for multiple apps and packages

* Set up i18n in partner-sas
* Adapt lokalise workflow to monorepo
* Fix layout props

Approved-by: Linus Flood


@@ -294,133 +294,3 @@ This was inspired by [server-only-context](https://github.com/manvalls/server-on

  defaultMessage: "This is a message",
})
```

### Integration with Lokalise

> For more information, read about [the workflow](#markdown-header-the-workflow) below.

#### Message extraction from codebase

Extracts the messages from calls to `intl.formatMessage()` and other supported methods on `intl`.

Running the following command generates a JSON file at `./i18n/tooling/extracted.json`. The file is formatted for consumption by Lokalise, and it is the file that gets uploaded to Lokalise.

```bash
yarn workspace @scandic-hotels/scandic-web i18n:extract
```
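
The extracted file uses Lokalise's structured JSON format, which is built by the formatter removed in this commit. Each key is a message ID, and it maps to an object with a `translation` plus optional `notes`, `context`, `limit` and `tags`. Below is a minimal sketch of that shape, together with a runtime check that a script could run before uploading. The guard `isStructuredJson` and the example ID are hypothetical.

```typescript
// Shape of one entry, mirroring the TranslationEntry type in the formatter.
type TranslationEntry = {
  translation: string
  notes?: string
  context?: string
  limit?: number
  tags?: string[]
}

type LokaliseStructuredJson = Record<string, TranslationEntry>

// Hypothetical guard: checks that parsed JSON has the expected shape.
function isStructuredJson(value: unknown): value is LokaliseStructuredJson {
  if (typeof value !== "object" || value === null || Array.isArray(value)) {
    return false
  }
  return Object.values(value).every((entry) => {
    if (typeof entry !== "object" || entry === null) return false
    const e = entry as Record<string, unknown>
    return (
      typeof e.translation === "string" &&
      (e.notes === undefined || typeof e.notes === "string") &&
      (e.context === undefined || typeof e.context === "string") &&
      (e.limit === undefined || typeof e.limit === "number") &&
      (e.tags === undefined ||
        (Array.isArray(e.tags) && e.tags.every((t) => typeof t === "string")))
    )
  })
}

// Example content; the ID "a1B2c3" is made up for illustration.
const sample: unknown = JSON.parse(
  '{"a1B2c3": {"translation": "Book", "context": "The action to reserve a room"}}'
)

console.log(isStructuredJson(sample)) // true
```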

#### Checking for changes between codebase and Lokalise

> _NOTE_: The diff only considers English.

We recommend downloading the latest labels from Lokalise before diffing, so that the comparison uses the current state of Lokalise (see "Message download from Lokalise" below).

- Run the message extraction above.
- Run `yarn workspace @scandic-hotels/scandic-web i18n:diff`.
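
The diff script removed in this commit compares three key sets:

- all Lokalise labels (`translations-all/en.json`);
- the translated, non-hidden labels (`translations/en.json`);
- the extracted codebase messages (`extracted.json`).

Below is a minimal sketch of the same set logic in TypeScript. It uses `Set` lookups instead of `Array.prototype.includes`. The helper names `keysNotIn` and `diffLabels` are hypothetical.

```typescript
type Labels = Record<string, unknown>

// Keys present in `a` but not in `b`.
function keysNotIn(a: Labels, b: Labels): string[] {
  const bKeys = new Set(Object.keys(b))
  return Object.keys(a).filter((key) => !bKeys.has(key))
}

// Hypothetical helper mirroring the diff script's three categories.
function diffLabels(all: Labels, filtered: Labels, fromCodebase: Labels) {
  // In Lokalise, but filtered out of the translated, non-hidden download
  const hidden = keysNotIn(all, filtered)
  const hiddenSet = new Set(hidden)
  // In Lokalise (non-hidden), but no longer defined in the codebase
  const toRemove = keysNotIn(filtered, fromCodebase)
  // In the codebase, but missing from Lokalise (hidden labels excluded)
  const toAdd = keysNotIn(fromCodebase, filtered).filter((k) => !hiddenSet.has(k))
  return { hidden, toRemove, toAdd }
}

const all = { a: {}, b: {}, c: {} }
const filtered = { a: {}, b: {} }
const fromCodebase = { a: {}, c: {}, d: {} }

console.log(diffLabels(all, filtered, fromCodebase))
// { hidden: [ 'c' ], toRemove: [ 'b' ], toAdd: [ 'd' ] }
```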

#### Message upload to Lokalise

Set the environment variable `LOKALISE_API_KEY` to the API key for Lokalise. The scripts also load environment variables from a `.env.local` file in the directory they are run from.

Running the following command uploads the JSON file generated by the extraction step to Lokalise.

The command waits until Lokalise has finished processing the upload. Once it completes, the messages are available for translation in Lokalise.

```bash
yarn workspace @scandic-hotels/scandic-web i18n:upload
```
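
In the removed `lokalise.ts`, the upload step polls Lokalise's queued process every second with `setInterval` until its status is `finished` (or `failed`). Below is a minimal, dependency-free sketch of the same idea using a sequential `async` loop. Each check waits for the previous one to complete, so requests never overlap when a status call is slow. Three things are assumptions: `getStatus` stands in for the Lokalise API call, and `pollUntilFinished` and its `maxAttempts` cap are hypothetical.

```typescript
const sleep = (ms: number) =>
  new Promise<void>((resolve) => setTimeout(resolve, ms))

// Hypothetical helper: polls until the status is "finished".
// Returns the number of checks it took; rejects on "failed" or timeout.
async function pollUntilFinished(
  getStatus: () => Promise<string>,
  intervalMs = 1000,
  maxAttempts = 60
): Promise<number> {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const status = await getStatus()
    if (status === "finished") return attempt
    if (status === "failed") throw new Error("Upload process failed")
    await sleep(intervalMs)
  }
  throw new Error(`Upload not finished after ${maxAttempts} attempts`)
}

// Usage with a fake status source that finishes on the third check.
const statuses = ["queued", "running", "finished"]
const fakeGetStatus = async () => statuses.shift() ?? "failed"

pollUntilFinished(fakeGetStatus, 10).then((attempts) =>
  console.log(`finished after ${attempts} checks`)
)
```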

#### Message download from Lokalise

Set the environment variable `LOKALISE_API_KEY` to the API key for Lokalise.

Running the following command downloads the translated assets from Lokalise to your local working copy. It downloads two sets:

- the translated, non-hidden labels into `translations`;
- all labels into `translations-all`.

_DOCUMENTATION PENDING FOR FULL WORKFLOW._

```bash
yarn workspace @scandic-hotels/scandic-web i18n:download
```

#### Message compilation

Compiles the assets that were downloaded from Lokalise into the dictionaries used by the codebase.

_DOCUMENTATION PENDING FOR FULL WORKFLOW._

```bash
yarn workspace @scandic-hotels/scandic-web i18n:compile
```
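
Compilation flattens Lokalise's structured JSON into the plain `id → message` dictionaries that react-intl consumes. The `compile` function in the formatter removed by this commit keeps only each entry's `translation`. Below is a self-contained sketch of that step; the sample ID and Swedish string are made up.

```typescript
type TranslationEntry = {
  translation: string
  context?: string
  limit?: number
  tags?: string[]
}

// Keep only the translated text; context, limit and tags are
// metadata for translators and are not needed at runtime.
function compile(
  msgs: Record<string, TranslationEntry>
): Record<string, string> {
  const results: Record<string, string> = {}
  for (const [id, msg] of Object.entries(msgs)) {
    results[id] = msg.translation
  }
  return results
}

const downloaded = {
  a1B2c3: { translation: "Boka", context: "The action to reserve a room" },
}

console.log(compile(downloaded)) // { a1B2c3: 'Boka' }
```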

#### Convenience script targets

- Extract and upload: `yarn workspace @scandic-hotels/scandic-web i18n:push`
- Download and compile: `yarn workspace @scandic-hotels/scandic-web i18n:pull`
- Extract, upload, download and compile (push && pull): `yarn workspace @scandic-hotels/scandic-web i18n:sync`

### The workflow

We use the following technical stack to handle translations of UI labels:

- [react-intl](https://formatjs.io/docs/getting-started/installation/): Library for handling translations in the codebase.
- [Lokalise](https://lokalise.com/): TMS (Translation Management System) for handling the translations from the editor side.

A translation is usually called a "message" in the context of i18n with react-intl.

In the codebase we use the [Imperative API](https://formatjs.github.io/docs/react-intl/api/) of react-intl. This lets us use the same patterns and rules wherever we format messages (JSX, data, utilities, etc.). For the same reason, we do not use the [React components](https://formatjs.github.io/docs/react-intl/components/) of react-intl: they only work in JSX, and their implementation and patterns could differ from other parts of the code.
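
To illustrate why the imperative API works everywhere: a plain utility can take `intl` as an argument and call `formatMessage` with no JSX involved. The sketch below uses a minimal stand-in interface and a fake `intl`, so it runs without react-intl. In the real codebase, `intl` would come from react-intl, for example `useIntl()` or `createIntl()`. The helper name `getBookingLabels` is hypothetical.

```typescript
// Minimal stand-in for the part of react-intl's IntlShape used here (assumption).
type MessageDescriptor = {
  defaultMessage: string
  description?: string | { context?: string; limit?: number; tags?: string }
}

interface IntlLike {
  formatMessage(descriptor: MessageDescriptor): string
}

// A utility, not a component, using the same formatMessage pattern as JSX code.
function getBookingLabels(intl: IntlLike) {
  return {
    book: intl.formatMessage({
      defaultMessage: "Book",
      description: "The action to reserve a room",
    }),
    cancel: intl.formatMessage({ defaultMessage: "Cancel" }),
  }
}

// Fake intl that returns the default message, similar to react-intl's
// fallback when a translation is missing.
const fakeIntl: IntlLike = {
  formatMessage: ({ defaultMessage }) => defaultMessage,
}

console.log(getBookingLabels(fakeIntl)) // { book: 'Book', cancel: 'Cancel' }
```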

To define messages we primarily call `intl.formatMessage` (but `intl` has other methods for other purposes too!). We deliberately do not name messages: we do **not** pass the `id` attribute to `formatMessage`. Instead, we rely on [@formatjs/cli](https://formatjs.io/docs/tooling/cli) and the SWC plugin, which together extract the messages defined in the codebase. This frees developers from having to name messages and from worrying about duplicates or collisions, since those are handled by the extraction tool and Lokalise.

The SWC plugin is a fairly new project, and because of version mismatches we pin its version. Once we upgrade to Next.js 15, we can upgrade the SWC plugin as well and stop pinning it.

Example of a simple message:

```typescript
const myMessage = intl.formatMessage({
  defaultMessage: "Hello from the docs!",
})
```

Extra information is sometimes helpful to translators. Examples are short sentences that are hard to translate without context, and homographs (words that are spelled the same but have different meanings). In those cases we can also specify a `description` key in the `formatMessage` call.

The tooling then extracts every permutation of the declared message along with its description. The same sentence or word therefore shows up multiple times in Lokalise with different contexts, and each occurrence can be translated individually. The description is intended to assist translators using Lokalise by providing context or additional information. Its value is either a string or an object with the following structure:

```typescript
description = string | {
  context?: string // natural language string providing context for translators in Lokalise (optional)
  limit?: number // character limit for the key enforced by Lokalise (optional)
  tags?: string // comma separated string (optional)
}
```
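
The formatter removed in this commit maps the object form of `description` onto Lokalise's structured JSON fields:

- `context` and `limit` are copied when they have the right type;
- `tags` is split on commas and trimmed.

A plain string description is logged as unsupported, so no context is attached in Lokalise. Below is a condensed sketch of that mapping. It returns warnings instead of calling the project's logger, and the function name `toLokaliseEntry` is hypothetical.

```typescript
type Description =
  | string
  | { context?: unknown; limit?: unknown; tags?: unknown }

type TranslationEntry = {
  translation: string
  context?: string
  limit?: number
  tags?: string[]
}

// Hypothetical condensed version of the formatter's per-message logic.
// Falsy values (empty string, 0) are ignored, as in the original.
function toLokaliseEntry(
  defaultMessage: string,
  description?: Description
): { entry: TranslationEntry; warnings: string[] } {
  const entry: TranslationEntry = { translation: defaultMessage }
  const warnings: string[] = []

  if (description) {
    if (typeof description === "string") {
      // Only the object form is mapped to Lokalise fields
      warnings.push("Unsupported description type 'string', skipping")
    } else {
      const { context, limit, tags } = description
      if (context) {
        if (typeof context === "string") entry.context = context
        else warnings.push("context must be a string")
      }
      if (limit) {
        if (typeof limit === "number") entry.limit = limit
        else warnings.push("limit must be a number")
      }
      if (tags) {
        // "booking, cta" -> ["booking", "cta"]
        if (typeof tags === "string") entry.tags = tags.split(",").map((s) => s.trim())
        else warnings.push("tags must be a comma separated string")
      }
    }
  }

  return { entry, warnings }
}

const { entry } = toLokaliseEntry("Book", {
  context: "The action to reserve a room",
  limit: 20,
  tags: "booking, cta",
})
console.log(entry)
```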

Example of a homograph with different contexts:

```typescript
const myMessage1 = intl.formatMessage({
  defaultMessage: "Book",
  description: "The action to reserve a room",
})

const myMessage2 = intl.formatMessage({
  defaultMessage: "Book",
  description: "A physical book that you can read",
})
```
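
Because no `id` is passed, the tooling derives one from the message content. By default, the formatjs tools generate IDs with the interpolation pattern `[sha512:contenthash:base64:6]`. The hash covers `defaultMessage` together with `description` when one is present, which is why two "Book" messages with different descriptions end up as separate keys.

The sketch below approximates that scheme with Node's `crypto`. Two points are assumptions: the exact string being hashed (here `defaultMessage#description`, with object descriptions not handled) and the SWC plugin's configuration. The resulting IDs may therefore differ from the real ones. `approximateMessageId` is a hypothetical name.

```typescript
import { createHash } from "node:crypto"

// Approximation (assumption) of the "[sha512:contenthash:base64:6]" pattern:
// hash the message content with SHA-512, base64-encode it, keep 6 characters.
function approximateMessageId(defaultMessage: string, description?: string): string {
  const content = description ? `${defaultMessage}#${description}` : defaultMessage
  return createHash("sha512").update(content).digest("base64").slice(0, 6)
}

const reserve = approximateMessageId("Book", "The action to reserve a room")
const readable = approximateMessageId("Book", "A physical book that you can read")

// Same text, different descriptions: different IDs, so separate Lokalise keys.
console.log(reserve !== readable) // true
```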

|

||||

|

||||

Examples with a (contrived) sentence:

|

||||

|

||||

```typescript

|

||||

const myMessage1 = intl.formatMessage({

|

||||

defaultMessage: "He gave her a ring!",

|

||||

description: "A man used a phone to call a woman",

|

||||

})

|

||||

const myMessage2 = intl.formatMessage({

|

||||

defaultMessage: "He gave her a ring!",

|

||||

description: "A man gave a woman a piece of jewelry",

|

||||

})

|

||||

```

|

||||

|

||||

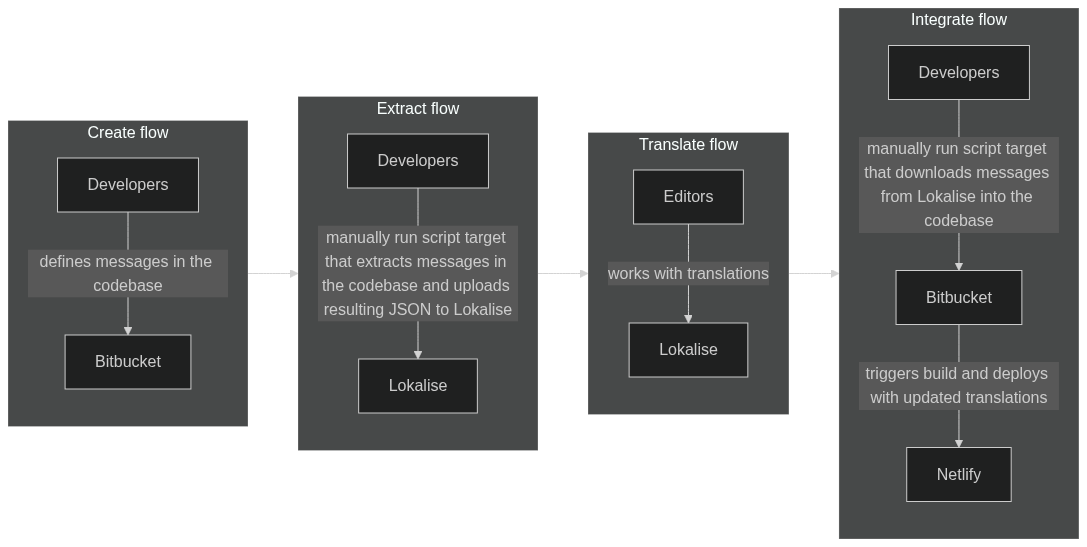

#### Diagram

|

||||

|

||||

A diagram showing the high level workflow of translations. It is currently a manual process syncing messages to and from Lokalise into the codebase.

|

||||

|

||||

[](https://mermaid.live/edit#pako:eNqdVM1u2zAMfhVC5_YFcthhaw8bug5Ye2rcA2PRthBZMiRqWVD03UfFipwtbVBMF1HkJ_Ljj_SiWq9JrVRn_a4dMDDc_Wxc40BWTJs-4DRAGwiZYP1l3jP2eYbkdUO_yPqJQpzt60YtqkY9w_U1aOqMowgjxYi9CMYBDwQ5-gYjCeYTfDa8Se2WuPqpGnEzBySnz-jRbw7YMqxvi_AuwQJ4i-GILqG1ewjJQWyDmRgYQ0-yDcjHIBdSQKchTdajjhAoJsvG9fDt4cc9sIc7v0VrSqbHw8LnqLmYqIBdtIdWPFbxn2RvtWEfYrWL76Iqie582EbYGV78Ge_iX7xOb3-ImXFMImVmX6v4bhsq5H8aof3OzUWuneiCH5cCCxd_YbhOg5_PV17n0MyLg-l7oQmbZKw-dFuTtHtfipkmLUh9XtR7Ymu6_WnconqrpOWt5Ytl5AqkzHY21DmYTctYZGNtxWxUV2qkMKLR8spfsq5RUpxR-rkSUWPYNqpxr4LDxP5h71q14pDoSgWf-kGtOrRRTnN-NwbF-Vi1E7on75czHWbt-_ypHP6W1z--V4Yq)

|

||||

|

||||

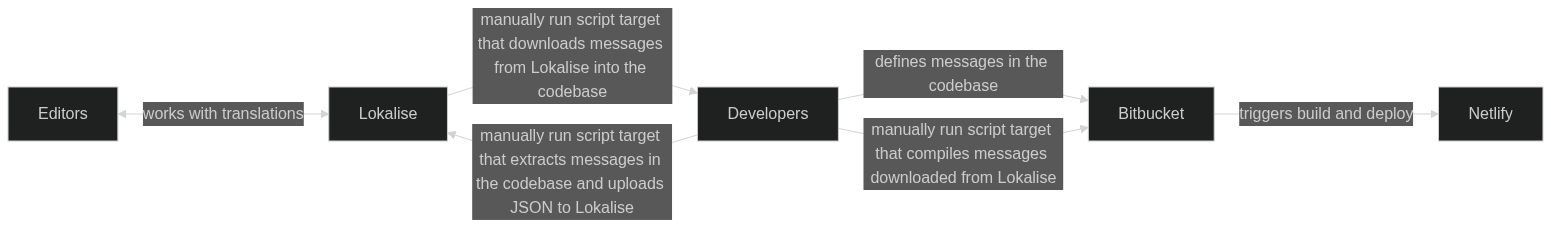

The following is a diagram showing how all the parts interact with each other. Note that interacting with Lokalise in any way does **NOT** trigger a build and deploy. A manual action by a developer is required to deploy the latest translations from Lokalise. (Once the manual process reaches maturity we might try and automate it)

|

||||

|

||||

[](https://mermaid.live/edit#pako:eNqdUsFqwzAM_RXh8_IDYewwusvoOlhvIxc1VhITxwq2vCyU_vuchKVlLRvMF0uypaf3eEdVsiaVq8ryUDboBbZvhYN0nrQR9gGyDAb2bYDBSAPi0QWLYtgFuM-yB9hyi9YEWro29EGWe1oaNVXGUYCOQsA6BcaBNAQT6AEDwTTg0cghli3JrQkduojWjuCjg1B60wsI-prS1aAAfaaNSvkFAp2G2FtGHeB5_7oD4XVnuCZw8fQnuObBLYNX9Mpzd55hXAK7Inxm-B_GJXe9sZeifq9B-gf8DXXXdIISb-p6gj1EY_WslKYk1Th37kisqcalT92pjnyHRiezHKdaoRKxjgqVp1CjbwtVuFP6h1F4P7pS5eIj3SnPsW5UXqENKYu9RqGNwdpjt1Z7dO_M55xm870s3pwtevoCSUvs9A)


@@ -1,57 +0,0 @@

import { stdin as input, stdout as output } from "node:process"
import * as readline from "node:readline/promises"

import { config } from "dotenv"

const rl = readline.createInterface({ input, output })

config({ path: `${process.cwd()}/.env.local` })

function diffArray(json1, json2) {
  const diff = []
  const keys1 = Object.keys(json1)
  const keys2 = Object.keys(json2)

  keys1.forEach((key) => {
    if (!keys2.includes(key)) {
      diff.push(key)
    }
  })

  return diff
}

async function main() {
  const answer = await rl.question(
    "To make sure we use the latest data for the diff, have you run i18n:download AND i18n:extract BEFORE running this? Type yes or no "
  )

  if (answer !== "yes") {
    console.log("")
    console.warn(
      "Please run i18n:download AND i18n:extract BEFORE running this."
    )
    rl.close()
    process.exit(1)
  }
  rl.close()

  const allLokalise = await import("./translations-all/en.json", {
    with: {
      type: "json",
    },
  })
  const fromCodebase = await import("./extracted.json", {
    with: {
      type: "json",
    },
  })

  const labelsToRemove = diffArray(allLokalise, fromCodebase)

  const { deleteBulk } = await import("./lokalise")

  await deleteBulk(labelsToRemove)
}

main()

@@ -1,70 +0,0 @@

import filteredLokalise from "./translations/en.json" with { type: "json" }
import allLokalise from "./translations-all/en.json" with { type: "json" }
import fromCodebase from "./extracted.json" with { type: "json" }

function diffArray(json1, json2) {
  const diff = []
  const keys1 = Object.keys(json1)
  const keys2 = Object.keys(json2)

  keys1.forEach((key) => {
    if (!keys2.includes(key)) {
      diff.push(key)
    }
  })

  return diff
}

function resolveLabels(ids, arr) {
  return ids.map((id) => {
    return {
      id,
      ...arr[id],
    }
  })
}

const labelsHidden = diffArray(allLokalise, filteredLokalise)
const labelsToRemove = diffArray(filteredLokalise, fromCodebase)
const labelsToAdd = diffArray(fromCodebase, filteredLokalise).filter(
  (key) => !labelsHidden.includes(key)
)

if (labelsToRemove.length === 0 && labelsToAdd.length === 0) {
  console.log(`Nothing has changed!`)
} else {
  console.log(`Labels to REMOVE from Lokalise: ${labelsToRemove.length}`)
  console.log(`Labels to ADD to Lokalise: ${labelsToAdd.length}`)
  console.log(`Labels HIDDEN in Lokalise: ${labelsHidden.length}`)
  console.log("")
}

if (labelsToRemove.length) {
  console.log(`${labelsToRemove.length} labels to remove from Lokalise:`)
  console.table(resolveLabels(labelsToRemove, filteredLokalise))
  console.log("")
}

if (labelsToAdd.length) {
  console.log("")
  console.log(`${labelsToAdd.length} labels to add to Lokalise`)
  console.table(resolveLabels(labelsToAdd, fromCodebase))
  console.log("")
}

if (labelsHidden.length) {
  console.log("")
  console.log(`${labelsHidden.length} labels are hidden in Lokalise`)
  console.table(resolveLabels(labelsHidden, allLokalise))
  console.log("")
}

if (labelsToRemove.length === 0 && labelsToAdd.length === 0) {
  console.log(`Nothing has changed!`)
} else {
  console.log(`Labels to REMOVE from Lokalise: ${labelsToRemove.length}`)
  console.log(`Labels to ADD to Lokalise: ${labelsToAdd.length}`)
  console.log(`Labels HIDDEN in Lokalise: ${labelsHidden.length}`)
  console.log("")
}

@@ -1,16 +0,0 @@

import path from "node:path"

import { config } from "dotenv"

config({ path: `${process.cwd()}/.env.local` })

const filteredExtractPath = path.resolve(__dirname, "translations")
const allExtractPath = path.resolve(__dirname, "translations-all")

async function main() {
  const { download } = await import("./lokalise")
  await download(filteredExtractPath, false)
  await download(allExtractPath, true)
}

main()

@@ -1,10 +0,0 @@

// Run the formatter.ts through Jiti

import { fileURLToPath } from "node:url"

import createJiti from "jiti"

const formatter = createJiti(fileURLToPath(import.meta.url))("./formatter.ts")

export const format = formatter.format
export const compile = formatter.compile

@@ -1,101 +0,0 @@

// https://docs.lokalise.com/en/articles/3229161-structured-json

import { logger } from "@scandic-hotels/common/logger"

import type { LokaliseMessageDescriptor } from "@/types/intl"

type TranslationEntry = {
  translation: string
  notes?: string
  context?: string
  limit?: number
  tags?: string[]
}

type CompiledEntries = Record<string, string>

type LokaliseStructuredJson = Record<string, TranslationEntry>

export function format(
  msgs: LokaliseMessageDescriptor[]
): LokaliseStructuredJson {
  const results: LokaliseStructuredJson = {}
  for (const [id, msg] of Object.entries(msgs)) {
    const { defaultMessage, description } = msg

    if (typeof defaultMessage === "string") {
      const entry: TranslationEntry = {
        translation: defaultMessage,
      }

      if (description) {
        if (typeof description === "string") {
          logger.warn(
            `Unsupported type for description, expected 'object', got ${typeof context}. Skipping!`,
            msg
          )
        } else {
          const { context, limit, tags } = description

          if (context) {
            if (typeof context === "string") {
              entry.context = context
            } else {
              logger.warn(
                `Unsupported type for context, expected 'string', got ${typeof context}`,
                msg
              )
            }
          }

          if (limit) {
            if (limit && typeof limit === "number") {
              entry.limit = limit
            } else {
              logger.warn(
                `Unsupported type for limit, expected 'number', got ${typeof limit}`,
                msg
              )
            }
          }

          if (tags) {
            if (tags && typeof tags === "string") {
              const tagArray = tags.split(",").map((s) => s.trim())
              if (tagArray.length) {
                entry.tags = tagArray
              }
            } else {
              logger.warn(
                `Unsupported type for tags, expected Array, got ${typeof tags}`,
                msg
              )
            }
          }
        }
      }

      results[id] = entry
    } else {
      logger.warn(
        `Skipping message, unsupported type for defaultMessage, expected string, got ${typeof defaultMessage}`,
        {
          id,
          msg,
        }
      )
    }
  }

  return results
}

export function compile(msgs: LokaliseStructuredJson): CompiledEntries {
  const results: CompiledEntries = {}

  for (const [id, msg] of Object.entries(msgs)) {
    results[id] = msg.translation
  }

  return results
}

@@ -1,268 +0,0 @@

import fs from "node:fs/promises"
import { performance, PerformanceObserver } from "node:perf_hooks"

import { LokaliseApi } from "@lokalise/node-api"
import AdmZip from "adm-zip"

import { createLogger } from "@scandic-hotels/common/logger/createLogger"

const projectId = "4194150766ff28c418f010.39532200"
const lokaliseApi = new LokaliseApi({ apiKey: process.env.LOKALISE_API_KEY })

const lokaliseLogger = createLogger("lokalise")

let resolvePerf: (value?: unknown) => void
const performanceMetrics = new Promise((resolve) => {
  resolvePerf = resolve
})

const perf = new PerformanceObserver((items) => {
  const entries = items.getEntries()
  for (const entry of entries) {
    if (entry.name === "done") {
      // This is the last measure meant for clean up
      performance.clearMarks()
      perf.disconnect()
      if (typeof resolvePerf === "function") {
        resolvePerf()
      }
    } else {
      lokaliseLogger.info(
        `[metrics] ${entry.name} completed in ${entry.duration} ms`
      )
    }
  }
  performance.clearMeasures()
})

async function waitUntilUploadDone(processId: string) {
  return new Promise<void>((resolve, reject) => {
    const interval = setInterval(async () => {
      try {
        performance.mark("waitUntilUploadDoneStart")

        lokaliseLogger.debug("Checking upload status...")

        performance.mark("getProcessStart")
        const process = await lokaliseApi.queuedProcesses().get(processId, {
          project_id: projectId,
        })
        performance.mark("getProcessEnd")
        performance.measure(
          "Get Queued Process",
          "getProcessStart",
          "getProcessEnd"
        )

        lokaliseLogger.debug(`Status: ${process.status}`)

        if (process.status === "finished") {
          clearInterval(interval)
          performance.mark("waitUntilUploadDoneEnd", { detail: "success" })
          performance.measure(
            "Wait on upload",
            "waitUntilUploadDoneStart",
            "waitUntilUploadDoneEnd"
          )
          resolve()
        } else if (process.status === "failed") {
          throw process
        }
      } catch (e) {
        clearInterval(interval)
        lokaliseLogger.error("An error occurred:", e)
        performance.mark("waitUntilUploadDoneEnd", { detail: e })
        performance.measure(
          "Wait on upload",
          "waitUntilUploadDoneStart",
          "waitUntilUploadDoneEnd"
        )
        reject()
      }
    }, 1000)
  })
}

export async function upload(filepath: string) {
  perf.observe({ type: "measure" })

  try {
    lokaliseLogger.debug(`Uploading ${filepath}...`)

    performance.mark("uploadStart")

    performance.mark("sourceFileReadStart")
    const data = await fs.readFile(filepath, "utf8")
    const buff = Buffer.from(data, "utf8")
    const base64 = buff.toString("base64")
    performance.mark("sourceFileReadEnd")
    performance.measure(
      "Read source file",
      "sourceFileReadStart",
      "sourceFileReadEnd"
    )

    performance.mark("lokaliseUploadInitStart")
    const bgProcess = await lokaliseApi.files().upload(projectId, {
      data: base64,
      filename: "en.json",
      lang_iso: "en",
      detect_icu_plurals: true,
      format: "json",
      convert_placeholders: true,
      replace_modified: true,
    })
    performance.mark("lokaliseUploadInitEnd")
    performance.measure(
      "Upload init",
      "lokaliseUploadInitStart",
      "lokaliseUploadInitEnd"
    )

    performance.mark("lokaliseUploadStart")
    await waitUntilUploadDone(bgProcess.process_id)
    performance.mark("lokaliseUploadEnd")
    performance.measure(
      "Upload transfer",
      "lokaliseUploadStart",
      "lokaliseUploadEnd"
    )

    lokaliseLogger.debug("Upload successful")
  } catch (e) {
    lokaliseLogger.error("Upload failed", e)
  } finally {
    performance.mark("uploadEnd")

    performance.measure("Upload operation", "uploadStart", "uploadEnd")
  }

  performance.measure("done")

  await performanceMetrics
}

export async function download(extractPath: string, all: boolean = false) {
  perf.observe({ type: "measure" })

  try {
    lokaliseLogger.debug(
      all
        ? "Downloading all translations..."
        : "Downloading filtered translations..."
    )

    performance.mark("downloadStart")

    performance.mark("lokaliseDownloadInitStart")
    const downloadResponse = await lokaliseApi.files().download(projectId, {
      format: "json_structured",
      indentation: "2sp",
      placeholder_format: "icu",
      plural_format: "icu",
      icu_numeric: true,
      bundle_structure: "%LANG_ISO%.%FORMAT%",
      directory_prefix: "",
      filter_data: all ? [] : ["translated", "nonhidden"],
      export_empty_as: "skip",
    })
    performance.mark("lokaliseDownloadInitEnd")
    performance.measure(
      "Download init",
      "lokaliseDownloadInitStart",
      "lokaliseDownloadInitEnd"
    )

    const { bundle_url } = downloadResponse

    performance.mark("lokaliseDownloadStart")
    const bundleResponse = await fetch(bundle_url)
    performance.mark("lokaliseDownloadEnd")
    performance.measure(
      "Download transfer",
      "lokaliseDownloadStart",
      "lokaliseDownloadEnd"
    )

    if (bundleResponse.ok) {
      performance.mark("unpackTranslationsStart")
      const arrayBuffer = await bundleResponse.arrayBuffer()
      const buffer = Buffer.from(new Uint8Array(arrayBuffer))
      const zip = new AdmZip(buffer)
      zip.extractAllTo(extractPath, true)
      performance.mark("unpackTranslationsEnd")
      performance.measure(
        "Unpacking translations",
        "unpackTranslationsStart",
        "unpackTranslationsEnd"
      )

      lokaliseLogger.debug("Download successful")
    } else {
      throw bundleResponse
    }
  } catch (e) {
    lokaliseLogger.error("Download failed", e)
  } finally {
    performance.mark("downloadEnd")

    performance.measure("Download operation", "downloadStart", "downloadEnd")
  }

  performance.measure("done")

  await performanceMetrics
}

export async function deleteBulk(keyNames: string[]) {
  perf.observe({ type: "measure" })

  try {
    performance.mark("bulkDeleteStart")

    let keysToDelete: number[] = []
    let cursor: string | undefined = undefined
    let hasNext = true
    do {
      const keys = await lokaliseApi.keys().list({
        project_id: projectId,
        limit: 100,
        pagination: "cursor",
        cursor,
      })

      cursor = keys.nextCursor ?? undefined
      keys.items.forEach((key) => {
        if (key.key_id && key.key_name.web) {
          if (keyNames.includes(key.key_name.web)) {
            keysToDelete.push(key.key_id)
          }
        }
      })

      if (!keys.hasNextCursor()) {
        hasNext = false
      }
    } while (hasNext)

    const response = await lokaliseApi
      .keys()
      .bulk_delete(keysToDelete, { project_id: projectId })

    lokaliseLogger.debug(
      `Bulk delete successful, removed ${keysToDelete.length} keys`
    )

    return response
  } catch (e) {
    lokaliseLogger.error("Bulk delete failed", e)
  } finally {
    performance.mark("bulkDeleteEnd")

    performance.measure(
      "Bulk delete operation",
      "bulkDeleteStart",
      "bulkDeleteEnd"
    )
  }
}

@@ -1,14 +0,0 @@

import path from "node:path"

import { config } from "dotenv"

config({ path: `${process.cwd()}/.env.local` })

const filepath = path.resolve(__dirname, "./extracted.json")

async function main() {
  const { upload } = await import("./lokalise")
  await upload(filepath)
}

main()
